The Remarkable Three: A Foundation for Meaningful AI

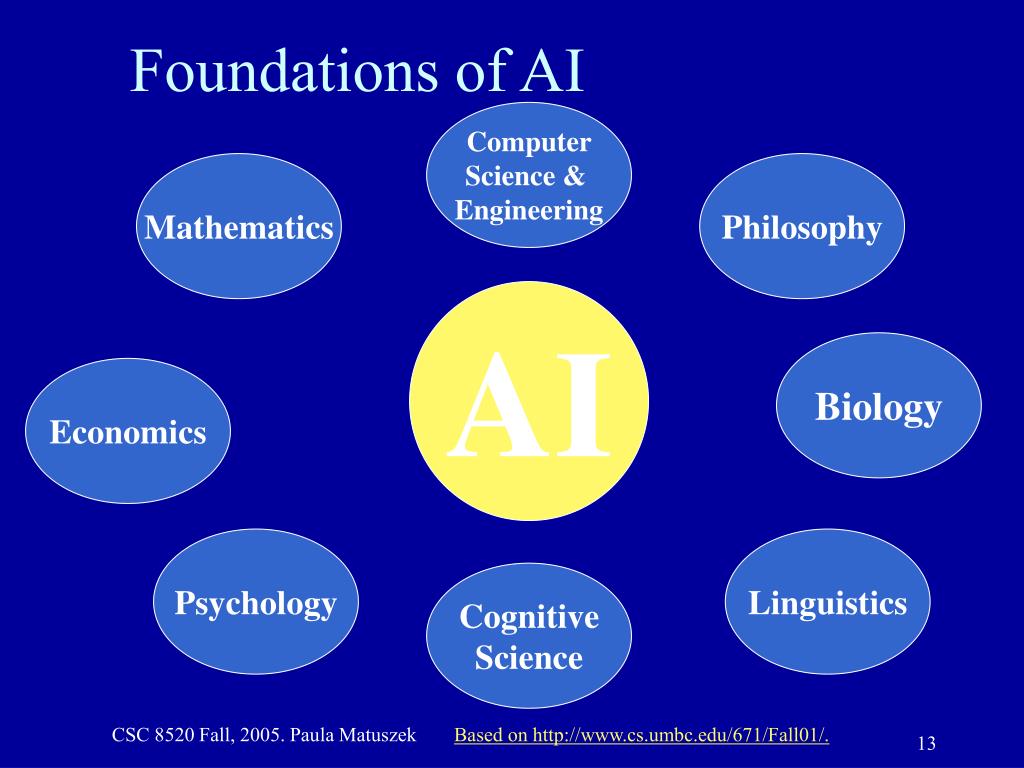

The field of artificial intelligence (AI) is advancing quickly, with new technologies and applications emerging at an unprecedented rate. Amid this evolution, three principles stand out as crucial for developing ethical, responsible, and beneficial AI: transparency, fairness, and accountability. These principles, which this article calls the "remarkable three," form a foundation for building AI systems that are not only powerful but also trustworthy and aligned with human values.

Transparency: Illuminating the Black Box

Transparency in AI refers to the ability to understand how an AI system arrives at its decisions. This matters because many AI models, such as deep neural networks, are complex enough to be described as "black boxes": their internal decision-making is opaque even to the people who built them. Without transparency, users cannot tell why a system made a particular decision or whether that decision was justified, and trust erodes as a result.

Benefits of Transparency:

- Increased Trust: Understanding the rationale behind an AI system’s decisions builds trust and confidence in its outputs.

- Enhanced Explainability: Transparency allows for easier interpretation of AI decisions, making them more understandable and accessible to both developers and users.

- Improved Debugging and Error Detection: Transparent algorithms are easier to debug, and they make potential biases or errors easier to spot, leading to more reliable and robust AI systems.

- Accountability and Responsibility: Transparency enables accountability for the actions of AI systems, allowing for identification and mitigation of potential risks.

Achieving Transparency:

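- Model Explainability Techniques: Techniques like LIME (Local Interpretable Model-Agnostic Explanations) and SHAP (SHapley Additive exPlanations) explain individual predictions of complex models. LIME fits a simple, interpretable surrogate model around the prediction being explained, while SHAP attributes the prediction to input features using Shapley values from cooperative game theory.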

- Data Transparency and Provenance: Understanding the source and quality of data used to train AI models is crucial for transparency. This involves tracking data lineage and ensuring data integrity.

- Documentation and Communication: Clear and concise documentation of AI models, their functionalities, and decision-making processes is essential for promoting transparency.
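
SHAP builds on Shapley values, which credit each input feature with its average marginal contribution to a prediction. To make that idea concrete, here is a minimal sketch that computes exact Shapley values for a toy model by enumerating every ordering of the features and replacing "absent" features with a baseline value. The model `toy_credit_score`, its weights, and the baseline are hypothetical, and this is not the `shap` library, which uses faster estimators because exact enumeration grows factorially with the number of features.

```python
from itertools import permutations
from math import factorial
from typing import Callable, Dict

Features = Dict[str, float]


def toy_credit_score(x: Features) -> float:
    """Hypothetical model: a linear score plus one income x savings interaction."""
    return 2.0 * x["income"] + 1.0 * x["savings"] - 3.0 * x["debt"] + 0.5 * x["income"] * x["savings"]


def shapley_values(model: Callable[[Features], float], instance: Features, baseline: Features) -> Features:
    """Exact Shapley values: average each feature's marginal contribution over all orderings.

    Features not yet added to the coalition keep their baseline value.
    Only practical for a handful of features (cost is n! model calls times n).
    """
    names = list(instance)
    totals = {name: 0.0 for name in names}
    for order in permutations(names):
        current = dict(baseline)            # start with every feature at its baseline
        prev_output = model(current)
        for name in order:
            current[name] = instance[name]  # add this feature to the coalition
            new_output = model(current)
            totals[name] += new_output - prev_output
            prev_output = new_output
    n_orders = factorial(len(names))
    return {name: total / n_orders for name, total in totals.items()}


if __name__ == "__main__":
    applicant = {"income": 3.0, "savings": 2.0, "debt": 1.0}
    reference = {"income": 1.0, "savings": 1.0, "debt": 1.0}
    phi = shapley_values(toy_credit_score, applicant, reference)
    for feature, value in phi.items():
        print(f"{feature}: {value:+.3f}")
    # Efficiency property: attributions sum to f(applicant) - f(reference)
    print("sum of attributions:", sum(phi.values()))
    print("prediction gap:", toy_credit_score(applicant) - toy_credit_score(reference))
```

For this toy input the attributions are 5.5 for income, 2.0 for savings, and 0 for debt (debt equals its baseline), and they add up to the 7.5-point gap between the applicant's score and the reference score. That additivity is what makes Shapley-based explanations easy to read as "how much each feature pushed the prediction."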

Fairness: Eliminating Bias in AI

Fairness in AI refers to the development and deployment of systems that do not discriminate against individuals or groups based on protected or sensitive characteristics such as race, gender, or socioeconomic status. Bias can creep into AI systems from several sources, including unrepresentative training data, algorithmic design choices, and historical prejudices reflected in the data.

Benefits of Fairness:

- Equitable Outcomes: Fair AI systems help ensure that all individuals have equal opportunities and access to benefits, regardless of their background.

- Reduced Discrimination: By mitigating bias, AI systems can help address existing societal inequalities and promote inclusivity.

- Ethical AI Development: Fairness is a fundamental ethical principle that guides responsible AI development and deployment.

- Improved Trust and Acceptance: Fair AI systems are more likely to be trusted and accepted by society, as they are perceived as unbiased and equitable.

Achieving Fairness:

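- Bias Detection and Mitigation: Fairness metrics, such as demographic parity (comparing positive-outcome rates across groups) and equal opportunity (comparing true-positive rates across groups), help detect bias, while de-biasing methods such as reweighting or resampling the training data help address it.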

- Diverse Data and Representation: Training AI models on diverse and representative datasets is crucial for mitigating bias.

- Fairness-Aware Algorithm Design: Designing algorithms with fairness considerations in mind can minimize the potential for bias in decision-making.

- Regular Monitoring and Evaluation: Continuous monitoring and evaluation of AI systems for fairness are essential to ensure that they remain fair as data and usage patterns change over time.
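
Group fairness metrics can be computed directly from a model's predictions and a column of group labels. The sketch below implements two common ones: demographic parity difference (the largest gap in positive-prediction rate between groups) and equal opportunity difference (the largest gap in true-positive rate, measured only over people whose true label is positive). The group names and labels in the example are made up for illustration; libraries such as Fairlearn provide tested implementations of metrics like these.

```python
from collections import defaultdict
from typing import Dict, Sequence


def rate_by_group(values: Sequence[int], groups: Sequence[str]) -> Dict[str, float]:
    """Mean of a binary column (e.g. predictions) within each group."""
    totals: Dict[str, int] = defaultdict(int)
    counts: Dict[str, int] = defaultdict(int)
    for value, group in zip(values, groups):
        totals[group] += value
        counts[group] += 1
    return {group: totals[group] / counts[group] for group in counts}


def demographic_parity_difference(y_pred: Sequence[int], groups: Sequence[str]) -> float:
    """Largest gap in positive-prediction rate between any two groups (0 means parity)."""
    rates = rate_by_group(y_pred, groups)
    return max(rates.values()) - min(rates.values())


def equal_opportunity_difference(y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[str]) -> float:
    """Largest gap in true-positive rate between groups, using actual positives only."""
    positive_preds = [p for t, p in zip(y_true, y_pred) if t == 1]
    positive_groups = [g for t, g in zip(y_true, groups) if t == 1]
    tprs = rate_by_group(positive_preds, positive_groups)
    return max(tprs.values()) - min(tprs.values())


if __name__ == "__main__":
    # Hypothetical labels and predictions for two groups, "a" and "b"
    y_true = [1, 1, 0, 0, 1, 1, 0, 0]
    y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    print("positive rates:", rate_by_group(y_pred, groups))
    print("demographic parity difference:", demographic_parity_difference(y_pred, groups))
    print("equal opportunity difference:", equal_opportunity_difference(y_true, y_pred, groups))
```

In this example group "a" receives positive predictions 75% of the time and group "b" 25%, so the demographic parity difference is 0.5; the true-positive rates are 1.0 and 0.5, giving an equal opportunity difference of 0.5 as well. Which metric matters depends on the application, and the two can conflict, so the choice is a policy decision as much as a technical one.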

Accountability: Ensuring Responsible AI

Accountability in AI refers to the ability to hold individuals or organizations responsible for the actions of AI systems. This involves establishing clear lines of responsibility for the development, deployment, and consequences of AI technologies. Accountability is crucial for ensuring that AI is used responsibly and ethically.

Benefits of Accountability:

- Ethical Use of AI: Accountability promotes responsible AI development and deployment by discouraging the use of AI for harmful or unethical purposes.

- Mitigation of Risks: Establishing accountability mechanisms helps identify and mitigate potential risks associated with AI systems, such as unintended consequences or misuse.

- Transparency and Trust: Accountability fosters transparency by requiring clear explanations for AI decisions and actions, thus building trust in AI systems.

- Legal and Regulatory Compliance: Accountability helps ensure compliance with relevant laws and regulations governing AI development and deployment.

Achieving Accountability:

- Clear Roles and Responsibilities: Defining clear roles and responsibilities for individuals and organizations involved in AI development and deployment is essential for accountability.

- Auditing and Monitoring: Regular auditing and monitoring of AI systems can surface errors, bias, or misuse and make clear who is responsible for responding to them.

- Transparency and Traceability: Maintaining transparent and traceable records of AI development and deployment processes facilitates accountability.

- Legal and Ethical Frameworks: Establishing clear legal and ethical frameworks for AI development and deployment provides a foundation for accountability.
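
Traceable records are only useful for accountability if they cannot be quietly altered. One common way to make a log tamper-evident is to chain entries together with cryptographic hashes, so that each entry stores the hash of the one before it. The sketch below assumes a simple in-memory log; the `AuditLog` class, its field names, and the example actors and model versions are hypothetical, and a production system would also record timestamps and persist the log to write-once storage.

```python
import copy
import hashlib
import json
from dataclasses import dataclass, field
from typing import Any, Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class AuditLog:
    """Append-only log; each entry stores the SHA-256 hash of the previous entry."""
    entries: List[Dict[str, Any]] = field(default_factory=list)

    @staticmethod
    def _digest(record: Dict[str, Any]) -> str:
        # Canonical JSON (sorted keys) so identical records always hash identically
        payload = json.dumps(record, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, actor: str, model_version: str, event: Dict[str, Any]) -> str:
        """Record who did what with which model version; returns the new entry's hash."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        record = {
            "actor": actor,
            "model_version": model_version,
            "event": copy.deepcopy(event),  # copy so later changes by the caller don't leak in
            "prev_hash": prev_hash,
        }
        entry = dict(record, hash=self._digest(record))
        self.entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        """Recompute every hash; an edited, removed, or reordered entry breaks the chain."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            record = {k: v for k, v in entry.items() if k != "hash"}
            if record["prev_hash"] != prev_hash or self._digest(record) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append("loan-model-service", "credit-model-1.2", {"decision_id": 17, "outcome": "declined"})
    log.append("human-reviewer", "credit-model-1.2", {"decision_id": 17, "override": "approved"})
    print("chain intact:", log.verify())
    log.entries[0]["event"]["outcome"] = "approved"  # simulate tampering with history
    print("chain intact after edit:", log.verify())
```

Because every hash depends on the entry before it, changing any past decision invalidates all later hashes, which lets an auditor detect the edit. The hash chain proves integrity, not authorship; tying entries to specific people would additionally require signing them.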

FAQs about the Remarkable Three:

Q: Why are these three principles so important in AI development?

A: The remarkable three, transparency, fairness, and accountability, are essential for building trust in AI systems and ensuring their responsible and ethical use. Without these principles, AI risks becoming a tool for discrimination, manipulation, and harm.

Q: How can we ensure that AI systems are developed and deployed in a way that upholds these principles?

A: Implementing these principles requires a multi-faceted approach, including:

- Developing ethical guidelines and frameworks for AI development and deployment.

- Investing in research and development of AI technologies that prioritize transparency, fairness, and accountability.

- Educating developers and users about the importance of these principles and how to implement them in practice.

- Establishing regulatory frameworks and oversight mechanisms to ensure compliance with ethical standards.

Q: What are some examples of how these principles are being applied in real-world AI applications?

A: Several organizations, as well as regulators, are working to put these principles into practice:

- Google’s AI Principles: Google has developed a set of AI principles that emphasize fairness, transparency, and accountability.

- The Partnership on AI: This non-profit organization brings together researchers, developers, and policymakers to discuss and promote responsible AI development.

- The European Union’s General Data Protection Regulation (GDPR): This regulation sets requirements for data privacy and transparency and includes an explicit accountability principle requiring organizations that control the processing of personal data to demonstrate compliance.

Tips for Implementing the Remarkable Three:

- Prioritize Explainability: Strive to develop AI systems that are transparent and explainable, allowing users to understand how decisions are made.

- Actively Mitigate Bias: Implement bias detection and mitigation techniques throughout the AI development process.

- Establish Clear Accountability: Define clear roles and responsibilities for individuals and organizations involved in AI development and deployment.

- Foster Open Communication: Encourage open communication and dialogue about the ethical implications of AI.

- Continuously Monitor and Evaluate: Regularly monitor and evaluate AI systems for adherence to ethical principles and make adjustments as needed.
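
Continuous monitoring usually starts with checking whether the data a model sees in production still resembles the data it was trained and evaluated on. One widely used statistic for this is the population stability index (PSI), which compares the share of values falling into each bin in a reference window (e) and a current window (a): PSI = sum over bins of (a - e) * ln(a / e). The sketch below is a minimal standard-library version. The bin edges, the small epsilon that keeps empty bins from producing log(0), and the 0.1 and 0.25 alert thresholds (a common industry rule of thumb, not a formal standard) are all assumptions to tune per application.

```python
import math
from bisect import bisect_right
from typing import List, Sequence


def bin_proportions(values: Sequence[float], edges: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Share of values in each bin defined by sorted interior edges.

    Empty bins are floored at eps so the log in the PSI stays finite
    (the floored shares therefore no longer sum exactly to 1).
    """
    counts = [0] * (len(edges) + 1)
    for value in values:
        counts[bisect_right(edges, value)] += 1  # a value equal to an edge goes to the upper bin
    total = len(values)
    return [max(count / total, eps) for count in counts]


def population_stability_index(reference: Sequence[float], current: Sequence[float], edges: Sequence[float]) -> float:
    """PSI between a reference sample and a current sample over the same bins."""
    expected = bin_proportions(reference, edges)
    actual = bin_proportions(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_status(psi: float) -> str:
    """Rule-of-thumb interpretation; thresholds are assumptions, not a standard."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate shift"
    return "significant shift"


if __name__ == "__main__":
    edges = [0.25, 0.5, 0.75]                 # hypothetical bins for a score in [0, 1]
    reference_scores = [0.1, 0.3, 0.6, 0.9]   # e.g. scores at deployment time
    current_scores = [0.8, 0.85, 0.9, 0.95]   # e.g. scores this week
    psi = population_stability_index(reference_scores, current_scores, edges)
    print(f"PSI = {psi:.3f} -> {drift_status(psi)}")
```

A drift alert does not by itself mean the model has become unfair or inaccurate; it is a signal to re-run evaluations, including fairness metrics, on recent data before deciding whether to retrain or adjust the system.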

Conclusion:

The remarkable three, transparency, fairness, and accountability, are not simply buzzwords but essential principles for building trustworthy and beneficial AI. Prioritizing them helps ensure that AI is developed and deployed responsibly and that the technology serves humanity’s best interests. Achieving this requires a collective effort from researchers, developers, policymakers, and society as a whole.
